8. Ethics

8.1. Subject to Harm: Coercion, Deception, and Other Risks for Participants

Learning Objectives

- Define human subjects research and explain how it differs from nonhuman subjects research.

- Describe and provide examples of the various ways that research can harm human subjects.

Ideally, all types of research are limited by various sets of ethical concerns. However, research on human subjects is regulated much more heavily than research on nonhuman subjects. According to the U.S. Department of Health and Human Services (2017:7260), a human subject is a “living individual about whom an investigator … conducting research”:

- “Obtains information or biospecimens through intervention or interaction with the individual, and uses, studies, or analyzes the information or biospecimens”; or

- “Obtains, uses, studies, analyzes, or generates identifiable private information or identifiable biospecimens.”

A couple of things are worth noting about the federal policy that governs research on human subjects. First, it explicitly states that its regulations apply to anyone conducting research, “whether professional or student.” Second, even if the researcher does not interact directly with the individual, recording any identifying details (which may undermine the individual’s privacy and safety) requires the researcher to take greater care to uphold ethical practices.

Early trials of medical vaccinations provoked wide-ranging public debates over the ethics of conducting potentially dangerous research on human subjects (Rothman 1987). In 1796, scientist Edward Jenner exposed an eight-year-old boy to a virus related to smallpox in order to develop a vaccine for the devastating disease (Riedel 2005). Jenner believed the dangers posed to the child were outweighed by the need to find a way to prevent smallpox, an endeavor that he argued would benefit the whole of humanity. After the exposure, the boy fell sick with a fever and then chills, but within days, he felt much better. Jenner then exposed the child again, this time to smallpox itself. Nothing happened: the vaccination worked. While Jenner was exceedingly lucky that his human subject did not suffer long-term harm, things could easily have turned out far worse.

From a modern perspective, subjecting another human being to this level of danger would be unacceptable and unnecessary for a vaccine trial. It would violate a core principle of research ethics: to do no harm to participants. In rare cases, such as when a new, experimental medical treatment is being tested, putting participants at risk of harm can be justified. Even then, the potential benefits must be substantial, and the approach should be a last resort, used only when alternatives have failed or are not possible. For this reason, new vaccines and other treatments are typically tested on animal subjects (which themselves deserve ethical consideration) before researchers move on to humans. And once human trials begin, there are hard lines that prevent researchers from endangering participants.

Starting largely in the 20th century, public anger and horror over serious breaches in research ethics led to the development of rules—established both by government laws and professional norms—to rein in bad scientific behavior. Yet even when such rules existed, there was little accountability. At the end of World War II, Nazi doctors and scientists were put on trial by the Allies for conducting human experimentation on prisoners. Germany had already established regulations specifying that human subjects must clearly and willingly consent to their participation in medical research, a principle known as informed consent. Nazi scientists utterly ignored these regulations, however. They saw the human subjects forced to participate in their gruesome experiments—concentration camp inmates—as less than human and thus not deserving of protection.[1]

With no checks on their capacity for cruelty, Nazi scientists tortured and murdered thousands: not just political prisoners, but also Jewish, Polish, Roma, and LGBTQ individuals singled out as especially undesirable (Weindling et al. 2016). On an even larger scale, Japan’s infamous Unit 731 conducted lethal experiments on men, women, and children, most of them Chinese and Russian. These experiments ranged from exposing prisoners to frostbite, diseases, and biological weapons to performing vivisections and amputations.

After Germany surrendered, the Allies prosecuted some of the doctors who had conducted human experiments at Auschwitz, Dachau, and other concentration camps. One result of these tribunals was the creation of the Nuremberg Code, 10 research principles designed to guide doctors and scientists who conduct research on human subjects (see the sidebar The Nuremberg Code below). This code would inspire later rules adopted by numerous national governments and professional bodies. As for Japan’s even more egregious research program, the U.S. government deliberately concealed its knowledge of Unit 731’s activities so that war crimes prosecutors were unable to try the scientists responsible. U.S. general Douglas MacArthur secretly gave immunity to the researchers in order to gain full access to what was seen as valuable data—in addition to keeping Japan’s horrifically acquired knowledge about biological weapons away from the Soviets.

Even as Allied nations learned about the extent of the Axis’s human experimentation and established ethical guidelines in response, they remained hypocritically willing to ignore the transgressions of their own researchers, including those working for government agencies. Between 1932 and 1972, for example, the U.S. Public Health Service oversaw the Tuskegee Syphilis Study, one of the most notorious medical studies ever conducted. The researchers recruited 600 African American sharecroppers from impoverished areas of Macon County, Alabama, promising them free medical care in exchange for taking part in the study. Among the recruited participants, 399 men had latent syphilis but were not told of their condition. The researchers wanted to study the effects of the disease when it was left untreated, so they set up an elaborate ruse, giving the men false treatments for “bad blood” while comparing their outcomes to those of the study’s control group, the 201 men without syphilis. The researchers continued to deny the men effective care even after penicillin became a widely available treatment in the 1940s.

A leak to the press finally forced officials to shut down the experiment in 1972. By then, the failure to provide actual treatment over four decades had already brought about the deaths of 128 of the men from syphilis or related complications.

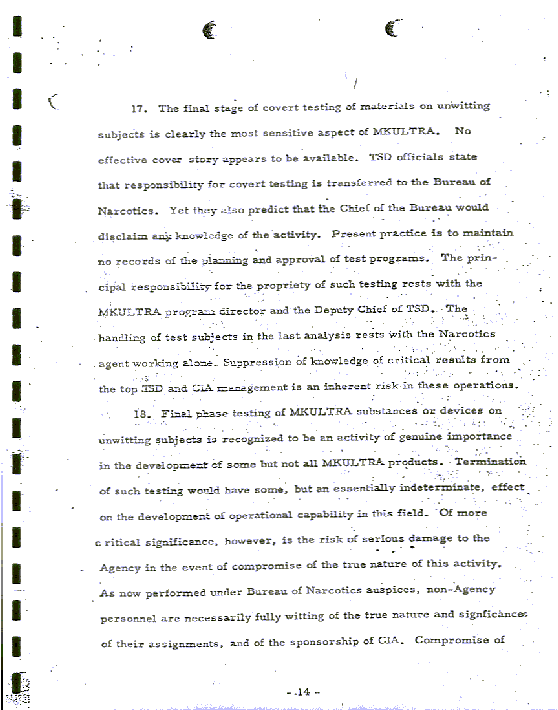

Inspired by experiments on mind control conducted by Japanese and Nazi doctors during World War II, the U.S. Central Intelligence Agency embarked in 1953 on a two-decade research initiative called Project MKUltra (also known as MK-ULTRA). According to journalist Stephen Kinzer (2019), the covert CIA project was not only “roughly based” on those earlier experiments, “but the CIA actually hired the vivisectionists and the torturers who had worked in Japan and in Nazi concentration camps to come and explain what they had found out so that we could build on their research.” For example, Nazi scientists who had experimented with mescaline at the Dachau concentration camp were brought aboard as advisors, Kinzer reports. The CIA also recruited Nazi doctors to lecture officers about “how long it took for people to die from sarin”—a poison gas the Nazis had also studied.

As part of MKUltra, CIA officers set up secret detention centers throughout Europe and East Asia where they experimented on captured enemy agents, testing not only drugs but also electroshock, extremes of temperature, sensory isolation, and harsh interrogation techniques meant to break down the ego. Government investigations into MKUltra finally put an end to the CIA program in the 1970s, though the CIA successfully destroyed most of the documents related to the project before investigators could obtain them.

Both the Tuskegee study and the CIA’s Project MKUltra caused unconscionable harm to participants and failed to obtain their informed consent. The consequences of these betrayals of basic ethical principles continue to reverberate today. The ensuing scandal over the Tuskegee experiment led to the creation of the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research (discussed further below), a U.S. federal body charged with developing ethical guidelines for research on human subjects. In spite of the reforms that ensued, the Tuskegee experiment is still brought up, many decades later, as a critical historical context for why many African Americans deeply distrust the medical establishment (Katz et al. 2008). MKUltra prompted a series of lawsuits against the federal government, with research participants and their survivors calling out the CIA’s flagrant use of deception and coercion. And thanks to the agency’s brazen document destruction, Project MKUltra still feeds wide-ranging conspiracy theories about the federal government to this day.

The Nuremberg Code

In 1947, the Nuremberg war crimes tribunal laid down 10 principles for “permissible medical experiments,” which would become known as the Nuremberg Code (Vollmann and Winau 1996):

The great weight of the evidence before us is to the effect that certain types of medical experiments on human beings, when kept within reasonably well-defined bounds, conform to the ethics of the medical profession generally. The protagonists of the practice of human experimentation justify their views on the basis that such experiments yield results for the good of society that are unprocurable by other methods or means of study. All agree, however, that certain basic principles must be observed in order to satisfy moral, ethical and legal concepts:

1. The voluntary consent of the human subject is absolutely essential: This means that the person involved should have legal capacity to give consent; should be so situated as to be able to exercise free power of choice, without the intervention of any element of force, fraud, deceit, duress, overreaching, or other ulterior form of constraint or coercion; and should have sufficient knowledge and comprehension of the elements of the subject matter involved as to enable him to make an understanding and enlightened decision.

2. The experiment should be such as to yield fruitful results for the good of society, unprocurable by other methods or means of study, and not random and unnecessary in nature.

3. The experiment should be so designed and based on the results of animal experimentation and a knowledge of the natural history of the disease or other problem under study that the anticipated results will justify the performance of the experiment.

4. The experiment should be so conducted as to avoid all unnecessary physical and mental suffering and injury.

5. No experiment should be conducted where there is an a priori reason to believe that death or disabling injury will occur; except, perhaps, in those experiments where the experimental physicians also serve as subjects.

6. The degree of risk to be taken should never exceed that determined by the humanitarian importance of the problem to be solved by the experiment.

7. Proper preparations should be made and adequate facilities provided to protect the experimental subject against even remote possibilities of injury, disability, or death.

8. The experiment should be conducted only by scientifically qualified persons. The highest degree of skill and care should be required through all stages of the experiment of those who conduct or engage in the experiment.

9. During the course of the experiment the human subject should be at liberty to bring the experiment to an end if he has reached the physical or mental state where continuation of the experiment seems to him to be impossible.

10. During the course of the experiment the scientist in charge must be prepared to terminate the experiment at any stage, if he has probable cause to believe, in the exercise of the good faith, superior skill and careful judgment required of him that a continuation of the experiment is likely to result in injury, disability, or death to the experimental subject.

Ethical Questions about Social Science Research

When regulators talk about putting safeguards on scientific research, they often distinguish between biomedical and behavioral research. The latter group includes the social sciences in general and sociology in particular. Unethical biomedical studies are especially troubling due to their potentially lethal consequences. Yet morally dubious research in the social sciences also occurs. Even if human subjects face no mortal threat, they can be subjected to distress or other psychological harm as a result of their participation. For this reason, governmental agencies, institutional review boards, and other regulatory bodies routinely scrutinize behavioral studies for possible risks to their human subjects, even though the stakes of such research are generally lower than for biomedical studies.

Among the various social scientific methods of research, experiments often entail the most intrusion into the lives of human participants, given that by definition they are not just about observing social interactions but also about manipulating those interactions to study the consequences (we’ll have more to say about the differences between experimental and observational data in Chapter 12: Experiments). As a result, some of the best-known instances of problematic research in the social sciences come from the field of psychology, which relies heavily on lab experiments.

In 1959, Harvard psychologist Henry Murray began a multiyear experiment on acute stress that involved 22 undergraduates at his university. The students were told they would debate philosophy with a fellow student. In preparation, they wrote essays about their closely held beliefs and aspirations. Without their knowledge, Murray had a litigator look over their essays and then sit down with each student to grill and belittle them. Electrodes attached to the subjects measured their physiological responses. The critic’s “vehement, sweeping, and personally abusive” attacks were intended to break down their egos and generate extreme distress, Murray said. The students then repeatedly watched film recordings of their frightened and angry reactions during the abuse sessions.

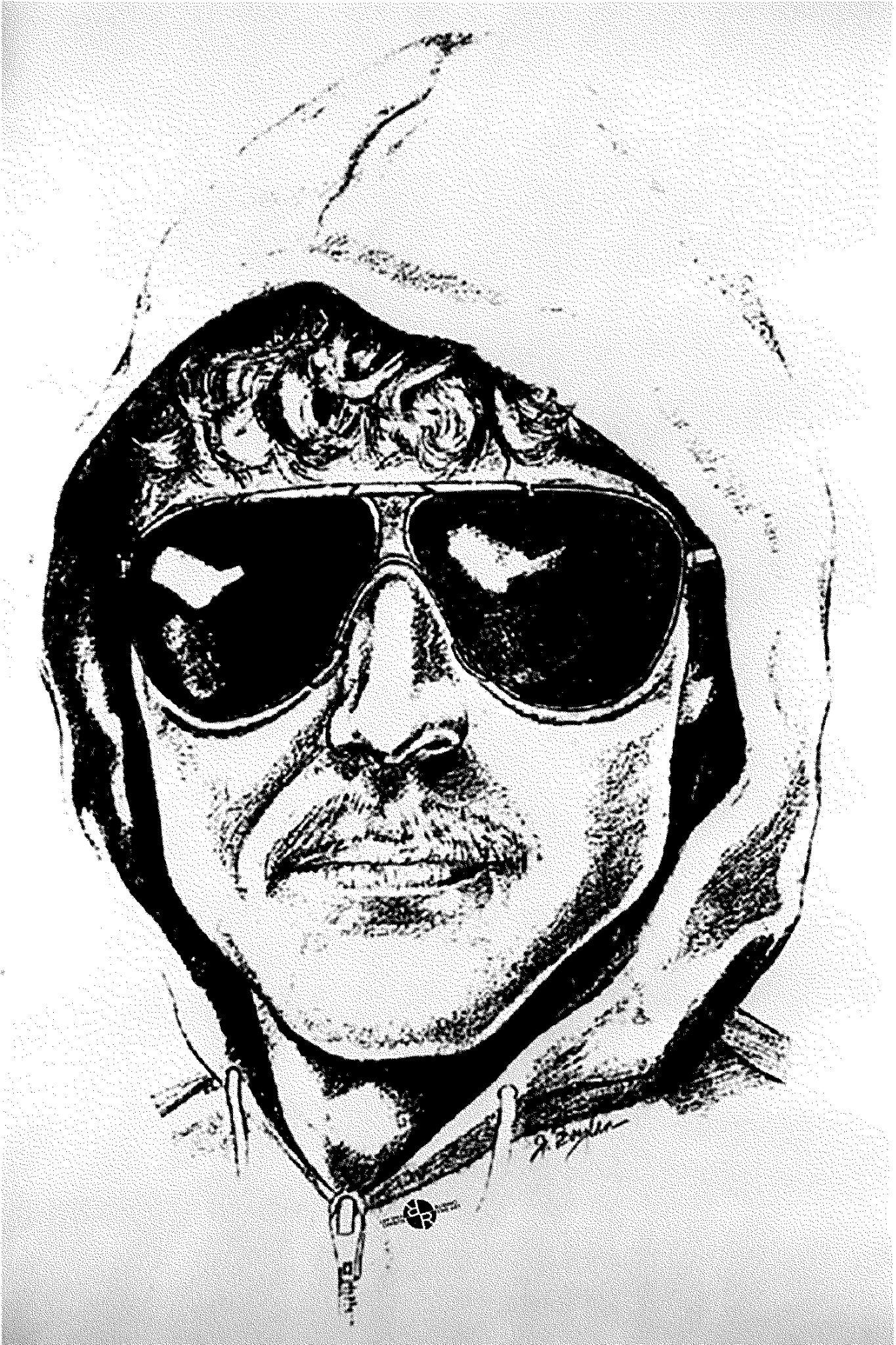

One of the subjects in Murray’s study was a 17-year-old sophomore named Ted Kaczynski, a brilliant but socially awkward math student who had skipped 11th grade to attend Harvard a year early. Journalist Alston Chase describes the experiment as “purposely brutalizing” and argues that it set Kaczynski down a dark path. Until Murray’s study ended in 1962, Kaczynski attended weekly sessions—200 hours in total—so that Murray could measure the impact of sustained abuse and humiliation on his subjects.

Kaczynski graduated from Harvard, obtained his PhD, and began a promising career as a math professor at the University of California, Berkeley. But in 1969, he abruptly resigned, having become, in his department chair’s words, “almost pathologically shy.” He moved out to a remote cabin in Montana to live as a survivalist. There, Kaczynski came to see technology and industrialization as the enemies of human freedom and dedicated himself to avenging the harm they had wrought on the natural world. He began sending mail bombs across the country, at first targeting engineering professors and the president of United Airlines, which prompted the FBI to use UNABOM (Universities and Airlines) as his case file name. The Unabomber was finally captured in 1996, but not before his terror spree killed three people, injured 23 others, and forced the Washington Post to give in to his demand to publish his 35,000-word anti-technology manifesto.

Perhaps the most cited (and even parodied) example of unethical psychological experimentation is a series of studies conducted by Stanley Milgram (1963, 1974) at Yale University to understand obedience to authority. Somewhat ironically, given how much criticism the ethics of his study would face, Milgram’s goal was to explore whether good people could be convinced to do evil things—a moral question made especially salient by the high-profile trial underway around that time of Nazi war criminal Adolf Eichmann. As Milgram would later write in a 1974 book on his experiments, “Could it be that Eichmann and his million accomplices in the Holocaust were just following orders? Could we call them all accomplices?”

In 1961, Milgram recruited 40 young and middle-aged men with diverse occupations and levels of education as his research subjects. They took on the role of “teachers” in an experiment that was supposed to test the ability of a “learner” to memorize word pairs. The teacher and learner were separated by a wall so that they could not see each other but could hear what the other said. In each iteration of the experiment, the learner would be strapped into a chair and wired with electrodes connected to a shock generator operated by the subject. When the learner failed to remember a word pair, the subject was told to press a button to shock them. The severity of the shocks began at a low voltage and then increased with each new error. The machine could deliver shocks between 15 and 450 volts, and its switches were explicitly labeled from “Slight Shock” to “Danger: Severe Shock.” If the subject hesitated to deliver a shock, the attending researcher, dressed in a gray lab coat to signal authority, would insist that they continue, becoming more demanding if the subject did not obey. As the shocks increased in intensity, learners would cry out, at some points banging against the wall in protest (if asked, the researcher would reassure the teacher that the shocks were not causing permanent damage). In later versions of the experiment, learners would even plead for mercy and reveal they had a heart condition. At the highest voltages, the learners would fall silent.

In reality, the electric shocks were not real, and the “learners” were actually actors (in lab lingo, confederates of the researchers) who were only pretending to be in pain. Milgram had expected only a small minority of participants to apply a severe shock, in line with the predictions of the students and other faculty he polled prior to the experiments. He was startled to find that every participant in his study went up to 300 volts, and two-thirds allowed the shocks to reach the maximum 450 volts. All of the participants paused the experiment to raise questions about it, but none of them—even those who refused to proceed to the highest voltage—demanded that the study be ended.

Although the learners only pretended to be in pain, many of Milgram’s research participants experienced emotional distress during the experiment, with some falling into fits of nervous laughter and even seizures (Ogden 2008). Milgram later said that most of the participants expressed gratitude for being part of the study. Nevertheless, some of his peers condemned the study for the psychological harm it potentially caused both at the time of the experiment and over the long term. The realization that you are willing to administer painful and even lethal shocks to another human being just because someone who looks authoritative has told you to do so might indeed be traumatizing, even if you later learn that the shocks were faked. After Milgram’s original 1963 paper came out, a number of replications and variations of his study were conducted (including by Milgram himself) and arrived at similar results. At the same time, the fierce criticism of the morality of Milgram’s experiments led to widespread discussion among psychologists about the potential risks of their work and ultimately brought about an overhaul of the discipline’s prevailing ethical standards.

While perhaps less widely known, a number of nonexperimental studies have also led to ethical scandals. Around the same time as Milgram’s experiments, sociology doctoral student Laud Humphreys (1970) was collecting data for his dissertation research, which employed the qualitative method known as ethnography (discussed in Chapter 9: Ethnography). Humphreys was studying the “tearoom trade,” the practice of men engaging in anonymous sexual liaisons in public restrooms. He was trying to understand who the men were and why they sought out these encounters. During his research, he conducted participant observation by serving as a “watch queen,” the person who keeps an eye out for police in exchange for being able to watch the sexual encounters.

Humphreys spent months with his subjects, getting to know them and learning more about the tearoom trade. Without their knowledge, he wrote down their license plate numbers as they pulled into or out of the parking lot near the restroom he was observing. With the help of some collaborators who had access to motor vehicle registration information, Humphreys used the plate numbers to find out the names and home addresses of his research subjects. Again without their consent, he went to their homes unannounced, disguising his identity and claiming he was a public health researcher. Under that pretense, he interviewed the men about their lives and their health, never mentioning his previous observations.

As you probably surmised, Humphreys’s study violated numerous principles of research ethics. He failed to identify himself as a researcher who was studying sexual encounters. He intruded on his participants’ privacy by obtaining their names and addresses and approaching them in their homes. He deceived them by claiming he was doing a public health study and not acknowledging any connection to his earlier observations. In spite of all these ethical breaches, the research that Humphreys conducted arguably did a lot of good. For one thing, it challenged myths and stereotypes about who was engaging in these sexual practices. Humphreys learned that over half of his subjects were married to women, and many did not identify as gay or bisexual. This knowledge helped decrease the intense social stigma of the time surrounding “men who have sex with men,” an umbrella term later coined to include men who have sex with other men but do not identify as gay or bisexual (Lehmiller 2017). Humphreys’s study even reportedly prompted some police departments to discontinue their raids on public restrooms and other places where anonymous sexual liaisons were taking place.

After its publication, however, Humphreys’s work became very controversial, opening up a still-ongoing discussion in the discipline about how much informed consent and protection of privacy ethnographers should ensure in order to do their work both effectively and ethically. Some scholars called the tearoom trade study unethical because Humphreys misled his participants and put them at risk of losing their families and their positions in society, given the stigma associated with gay sex (Warwick 1982). His defenders pointed out that the sexual encounters he observed occurred in public places with no legitimate expectation of privacy. And the benefits of Humphreys’s research (namely, the way it dispelled myths about the tearoom trade specifically and human sexual behavior more generally) outweighed any harm it may have caused, they said (Lenza 2004).

Looking back on his study in a later retrospective, Humphreys (2008) maintained that none of his research subjects had actually suffered due to his study, and that the “demystification of impersonal sex” had been of benefit to society. Over the years, however, Humphreys had also come to the conclusion that he should have conducted the second part of his study differently. Instead of writing down license plate numbers to track down his subjects at home and lying to them in the guise of a public health researcher, Humphreys could have spent more time in the field, he wrote. By doing so, he could have gained the trust of those he was studying and enlarged the pool of his informants. Then he could have divulged his true identity and sought their consent for his use of their data.

Today, sociologists would find it extremely difficult to conduct a project similar to Humphreys’s. When he did his fieldwork, an effective regulatory framework for policing scientific research was not yet in place, and sociology and other disciplines had not yet defined their standards for ethical research in a clear and comprehensive fashion. As we will discuss later in the chapter, these regulations and professional norms mean that researchers must design their studies in very specific ways to protect their human subjects. At the same time, we should note the gray areas that exist even with today’s more enlightened approach. Ambitious and important studies can create risks for subjects, but they can also be defended with ends-justify-the-means arguments, namely that the benefits of the research outweigh its risks, as Humphreys’s defenders argued about his study. Where to draw the line is a topic of constant debate, and the consensus may shift with each new generation of researchers, as our modern-day horror over studies that seemed appropriate to researchers decades ago may remind us.

Key Takeaways

- Because in sociology we deal primarily with human research subjects, sociologists face particular challenges in conducting research ethically.

- The history of science (including the social sciences) includes numerous examples of highly problematic research that harmed or otherwise negatively impacted human subjects.

Exercises

- Do you believe Humphreys’s research methods were ethically wrong? Or were they justifiable due to their larger (arguably positive) impacts?

- How would you change Humphreys’s research methods to make them more ethically acceptable?

[1] In addition to Jewish communities, Nazi ideology dehumanized those with mental and physical disabilities, ethnic minorities, and those who did not conform to heteronormative standards. Richard J. Evans (2006) discusses how the Nazis promoted forced sterilization and involuntary euthanasia, while George J. Annas and Michael A. Grodin (1995) document how the unethical practices of Nazi doctors and scientists informed the development of the Nuremberg Code.